Unity Imaging Collaborative

Open-access datasets, models, and code for the development and validation of AI in cardiology

Introduction

The Unity Imaging Collaborative is a group of UK cardiologists and echocardiographers who are collaborating on:

- Developing and curating datasets of cardiac images for research

- Collaboratively providing expert labels and annotations of these datasets

- Developing algorithms for cardiac image interpretation

- Designing, adapting, and training neural networks for interpreting and labelling images

- Validating the results of algorithmic methods of image interpretation

So far, we have developed:

- An online platform, unityimaging.net, that enables multiple experts to collaboratively label cardiac images.

- The largest open-access dataset of expert-labelled echocardiographic images for neural network development (currently more than 7,500 images).

Open access

The datasets, code, and trained networks are freely available to download under the following licenses:

- Datasets - Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International

- Trained model weights - Creative Commons Attribution 4.0 International

- Code - MIT License

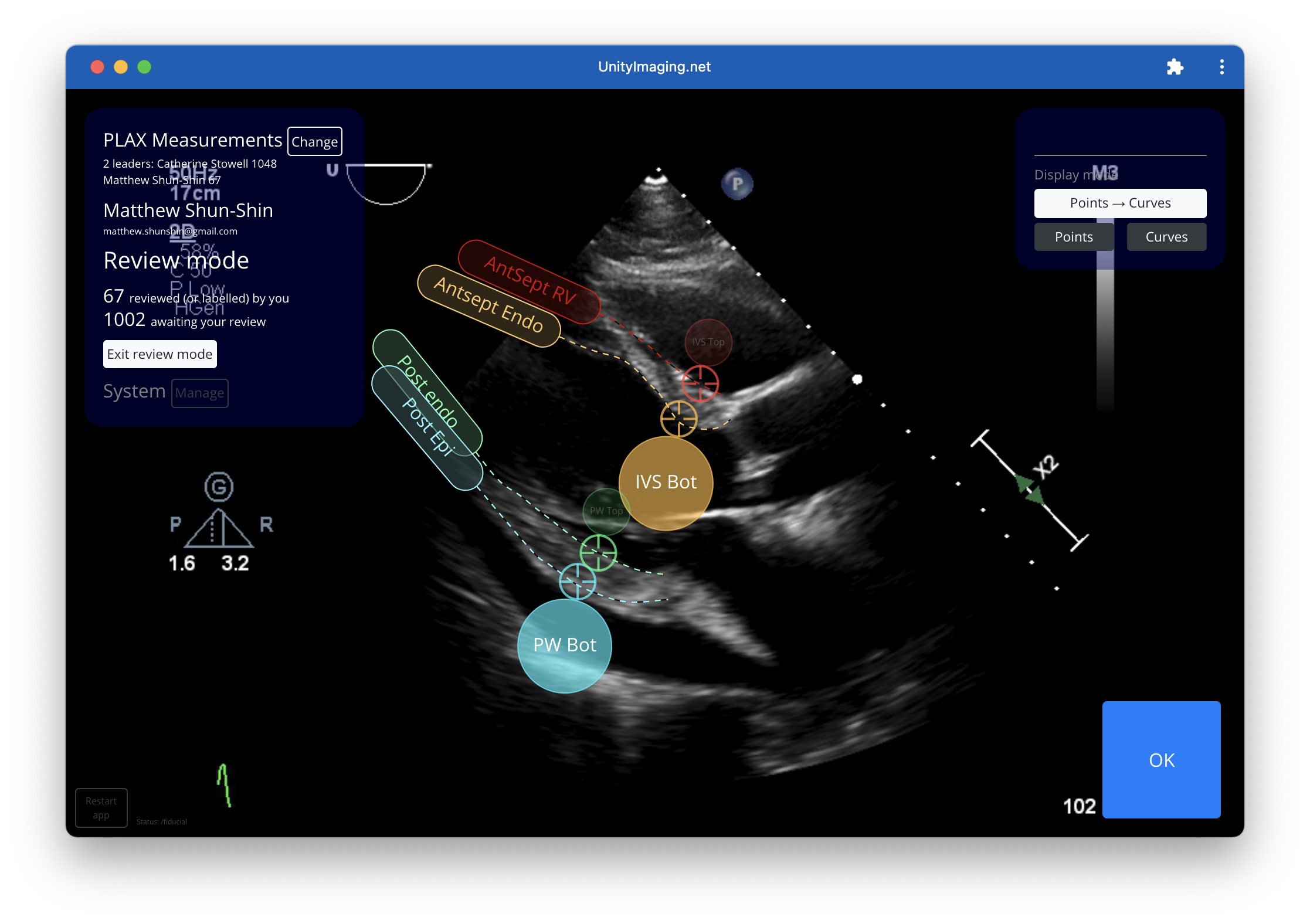

UnityImaging.net labelling platform

Installation

Please go to https://unityimaging.net in a supported browser and follow the on-screen instructions.

The platform is designed as an installable web app and works in Safari (on macOS, iPhone, or iPad) or Google Chrome (on Windows, Linux, or Android). It auto-detects your device type and shows the relevant installation instructions. Installation should take under 10 seconds. You will need a Google account to sign in.

Demonstration

The video below demonstrates how to use the collaborative unityimaging.net labelling platform.

Collaboration

The platform is designed to be 'self-service': interested parties can easily start new labelling projects.

Please contact us if you would like to contribute labels or images to our echocardiography dataset, generate your own dataset, or set up your own project.

Once this overall programme of research receives funding, you will be able to create your own projects, upload data, and gather expert labels using the platform.

Datasets

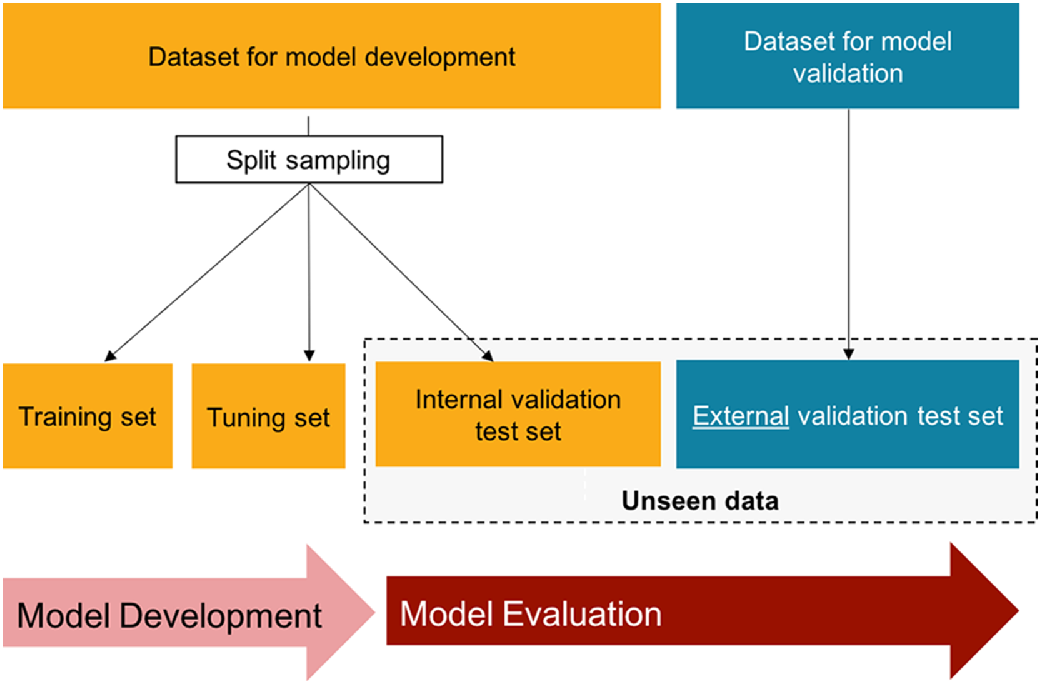

Terminology

As multiple disciplines have collaborated on applying AI to clinical imaging, the terminology for the subsets of a dataset has become confusing. We have tried to follow the definitions set out by Faes et al. (2020).

We use a shared dataset for model development across all Unity Imaging tasks. This allows the neural network to learn from related tasks, reducing the number of task-specific labels required.

The full shared dataset for model development is freely available, along with snapshots of the dataset used for each publication.

For each task or paper (e.g. parasternal long-axis (PLAX) measurements, ejection fraction (EF), global longitudinal strain), there is a specific dataset for model validation.

Further details on the datasets for model development and validation are available here.

Dataset structure

The structure of the downloadable datasets is described here.

Latest dataset release [2020-12-05]

These are the latest versions of the datasets and code, which are continually being added to. The code is hosted on GitHub.

Download 2020-12-05 release:

- Unity Imaging Echocardiography Model Development Dataset Images: Download

- Unity Imaging Echocardiography Model Development Dataset Labels: Download

- Unity Imaging Code: https://github.com/UnityImaging

For reproducibility, the specific snapshots of the datasets and code used for each publication are listed below.

Project 1: LV measurements in the PLAX view

This dataset (and associated paper) is for training a neural network to make the standard left ventricular (LV) measurements in the PLAX view.

Click here for the project page.

Project 2: Left ventricular longitudinal strain

This dataset (and associated paper, currently under submission) is for training a neural network to make LV longitudinal strain measurements.

Click here for the project page.

Additional Datasets

Other groups and collaborators have utilised the released Unity Imaging datasets and we have provided them with additional data.

Click here for the additional data page.

Collaborators' Publications

Other groups and collaborators have published work using the released Unity Imaging datasets and the additional data above.

Click here for the additional publications page.

Funding, support, and approvals

We are grateful to the following institutions for funding and support:

- National Institute for Health Research (NIHR), who support the Clinical Lectureships of Dr. Matthew Shun-Shin and Dr. James Howard at Imperial College.

- NIHR Imperial Biomedical Research Centre (NIHR Imperial BRC), for providing start-up funding to collect pilot data to enable project and programme grant applications.

This research and the open-access release of the datasets have been conducted under:

- The Imperial AI Echocardiography Dataset [IRAS: 279328, REC:20/SC/0386]

Contact details

For any questions, please contact Dr. Matthew Shun-Shin.